PDFSage Inc

AI-Powered PDF Tools & Revolutionary Model Pipelines

Legal & Product Info

Welcome to PDFSage Inc. Our first quality PDF-related product has been minimally launched, but it’s already disgustingly powerful. Our flexible data structures and forward-looking architecture ensure we can keep enhancing our AI-based capabilities indefinitely.

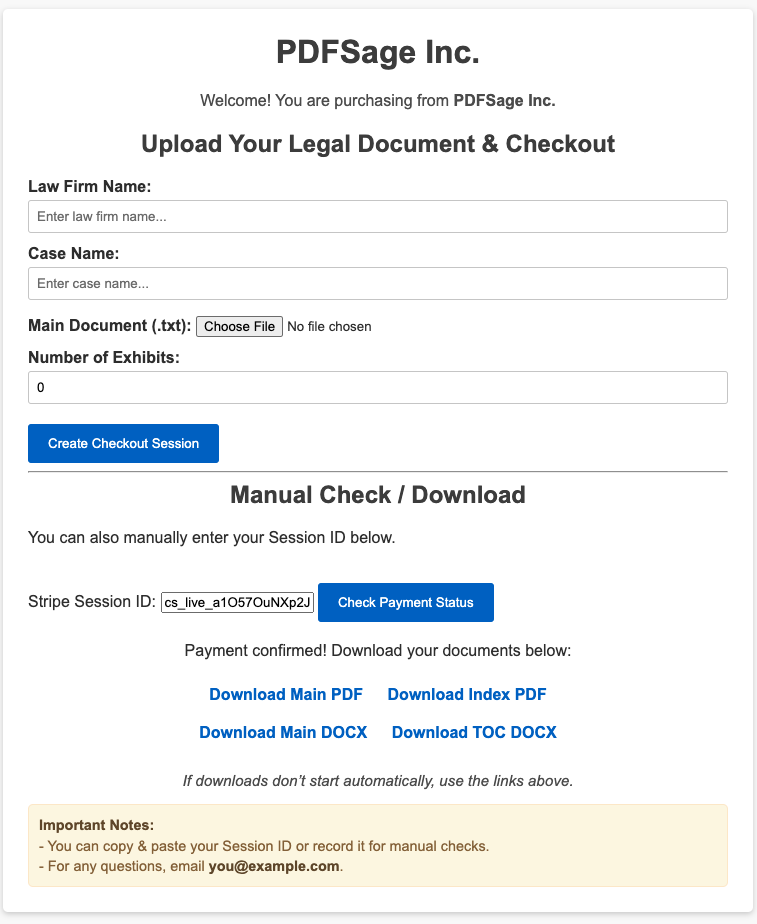

In addition, our roadmap includes robust customer support features for the near future. Presently, we offer two ways for you to try or purchase:

- Paid Version (99¢) – Stores your data, includes support, and continuous AI enhancements (with upcoming expansions to AGI-level intelligence and specialized solutions for law firms). It automatically generates complete legal and table-of-contents PDFs, plus corresponding DOCX documents:

- Free Trial – Doesn’t store data and has no support. Ideal for basic testing:

You may be a lawyer who wants to use this service anyways, especially after adding whatever capabiliites you want. Alternatively, if you're Pro Se, you may visit https://www.deepseek.com/ and use model DeekSeek-V1 which is roughly as capable as model o1 from OpenAI, or you may visit https://www.chatgpt.com to use model o1 pro after subscribing for $200 a month.

While the $20 a month sub is still useful since it offers the tiny o3-mini-high model that packs a punch above its weight class (model 4o-mini hallucinated like every single thing when I was trying to use it to sue DMH, while incarcerated and thus not allowed by the MA gov to access payment information, a feat repeated onto me by Amazon Prime), model DeekSeek V1 has for most tasks offered a very competitive alternative to model o1.

While I've used model o1 pro for 50% of my needs and model o3-mini-high for the other 50%, I used DeepSeek while I only had a $20 sub thanks to Amazon Prime's stolen Pixel 7A, and OpenAI limits the number of model o1 prompts on the $20 sub, unlike unlimited for both model o1 pro and model o1 on the $200 sub.

Anyways... DeekSeek V1 was able to take the first baby generation of a single next word for Exploit LLM and turn it into a truly first baby generative model that generates intelligently up until the max token length set in a python script. Then I happened to use model o3-mini-high which told me to save the tokenizer and sub-word tokenizer to file, early in main() which allowed for infinite inference runs without re-training.

My original point was that while model o1 and DeepSeek V1 share many similar limitations, model o1 does run on more tasks from my experience, while DeepSeek limited its max-token length or something more, resulting in 'time outs'.

PDFSage besides Exploit LLM, has developed the best theoretically possible and most theoretically efficient possible StupidityModel which is able to tell the difference between the use of hate crime language and the intelligent use of related sentiment embeddings, such as 'black people'.

If you've ever been censored for using the 'black' word on social media, it's because these tech giants don't know how to design basic small & efficient models! Using the same bert-base-uncased base model, PDFSage has also developed PersonalModel which, unlike the sentiment(negative, neutral, positive) dimensions and toxicity(toxic, non-toxic) dimensions, simply uses user_preference(love, hate) dimensions to automatically retrain itself to adapt to user preferences perfectly!

Additionally PDFSage has developed SageFlower, a model which for now generates 512x512 totally unique high fidelity flowers, after stealing all the flower images from Google Search etc. But eventually PDFSage will make this model mind blowing in capabilities.

PDFSage has developed numerous useful TFLite models and will continue to improve and develop them. When implemented into SagePDFFramework or other platforms, Firebase even lets developers upload models to their storage, then front-end users download on demand to reduce app size (I heard Apple charges a lot for SSD).

ANYWAYS, I could easily (it'll take like 30 minutes to code?) model the heristics as well as decent but not foolproof quality checks to automate the acquisiton process of lawsuit.txt for you, and I expect a decent effort may cause my API chip stack to go down by $2 on OpenAI's API, while a superior effort would make me lose $4 in chips.

By using any of the above, you acknowledge that this is an early-stage product and that new features and improvements will be introduced over time. Any stored data is handled with security in mind, but please refer to our future guidelines and documentation for updated privacy and compliance details.

Thank you for choosing PDFSage Inc. We appreciate your trust and aim to deliver the best PDF workflow and AI-based solutions possible.

PDFSage's Model Pipeline

Explore our growing family of advanced AI models:

TFLite Suite

Optimized TensorFlow Lite models for on-device performance, ready for integration and continuous updates.

Exploit LLM

An experimental large language model designed for robust text generation and heuristic-driven exploitation tasks.

SageFlower

Generates 512x512 high fidelity floral imagery using a specialized dataset – with more visionary expansions on the horizon.

StupidityModel

Distinguishes hateful usage of sensitive words from contextually acceptable usage. Built on bert-base-uncased for robust semantic insights.

PersonalModel

Adapts to your individual preferences ("love" vs. "hate"). Also based on bert-base-uncased, retraining itself with minimal user data.

Test the New Models

StupidityModel

PersonalModel Classification

PersonalModel Feedback

All services and products are provided "as is" without any guarantee. Under no circumstances shall PDFSage Inc be liable for any direct, indirect, or consequential loss arising from the use of our services.